Facebook Fighting Misinformation

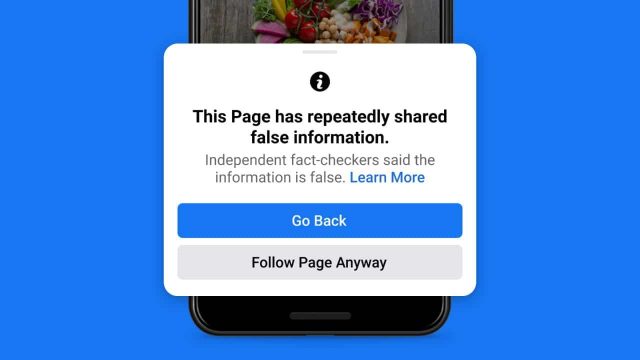

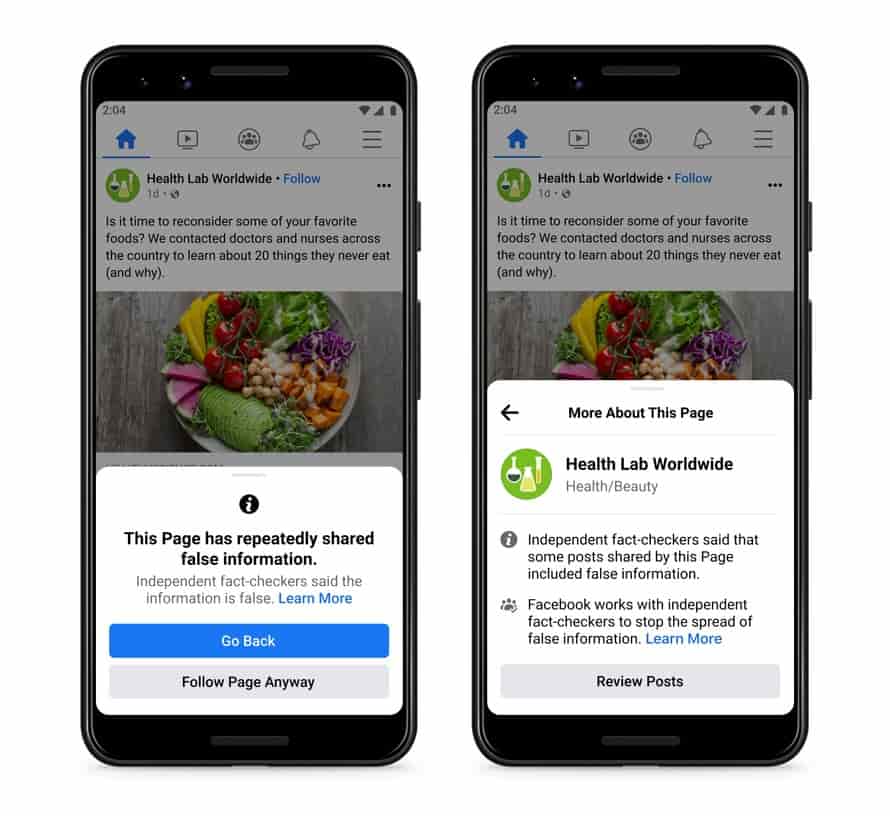

Social platforms like Facebook have long been plagued by misinformation, which users spread both intentionally and unintentionally. Many court rulings and law enforcement agencies have held that it is Facebook's responsibility to stop such misinformation from spreading.

This led the company to introduce new warning tools such as pop-ups, which appear whenever a user follows a page that has been marked for spreading misinformation. Further, users who make controversial posts will now see a warning that their posts will be down-ranked in the News Feed, and the posts will carry a fact-checking tag. Down-ranking unverified posts makes them less visible to the community, reducing their impact.

Facebook introduced a similar fact-checking system last year, after a rise in misinformation about COVID-19, presidential elections, and COVID-19 vaccines. Acting on unverified information can lead to harmful outcomes, so its spread should be controlled. Twitter, Instagram, and others have introduced similar tools to fight misinformation, adding fact-checking labels and warning pop-ups to posts that share controversial content.

Introducing these tools, Facebook said, “Whether it’s false or misleading content about COVID-19 and vaccines, climate change, elections or other topics, we’re making sure fewer people see misinformation on our apps.”